First I installed the OpenCV Python module and tried it out on Fedora 25.

I used Python 2.7.

[root@localhost mythcat]# dnf install opencv-python.x86_64

Last metadata expiration check: 0:21:12 ago on Sat Feb 25 23:26:59 2017.

Dependencies resolved.

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

opencv x86_64 3.1.0-8.fc25 fedora 1.8 M

opencv-python x86_64 3.1.0-8.fc25 fedora 376 k

python2-nose noarch 1.3.7-11.fc25 updates 266 k

python2-numpy x86_64 1:1.11.2-1.fc25 fedora 3.2 M

Transaction Summary

================================================================================

Install 4 Packages

Total download size: 5.6 M

Installed size: 29 M

Is this ok [y/N]: y

Downloading Packages:

(1/4): opencv-python-3.1.0-8.fc25.x86_64.rpm 855 kB/s | 376 kB 00:00

(2/4): opencv-3.1.0-8.fc25.x86_64.rpm 1.9 MB/s | 1.8 MB 00:00

(3/4): python2-nose-1.3.7-11.fc25.noarch.rpm 543 kB/s | 266 kB 00:00

(4/4): python2-numpy-1.11.2-1.fc25.x86_64.rpm 2.8 MB/s | 3.2 MB 00:01

--------------------------------------------------------------------------------

Total 1.8 MB/s | 5.6 MB 00:03

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Installing : python2-nose-1.3.7-11.fc25.noarch 1/4

Installing : python2-numpy-1:1.11.2-1.fc25.x86_64 2/4

Installing : opencv-3.1.0-8.fc25.x86_64 3/4

Installing : opencv-python-3.1.0-8.fc25.x86_64 4/4

Verifying : opencv-python-3.1.0-8.fc25.x86_64 1/4

Verifying : opencv-3.1.0-8.fc25.x86_64 2/4

Verifying : python2-numpy-1:1.11.2-1.fc25.x86_64 3/4

Verifying : python2-nose-1.3.7-11.fc25.noarch 4/4

Installed:

opencv.x86_64 3.1.0-8.fc25 opencv-python.x86_64 3.1.0-8.fc25

python2-nose.noarch 1.3.7-11.fc25 python2-numpy.x86_64 1:1.11.2-1.fc25

Complete!

[root@localhost mythcat]#
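
After the install finishes, a quick way to confirm that Python can see the new packages is to try importing them. This is only a small sketch: `module_version` is a hypothetical helper name, and it assumes the modules expose a `__version__` attribute, which `cv2` and `numpy` do.

```python
import importlib

def module_version(name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")

for name in ("cv2", "numpy"):
    print("%s: %s" % (name, module_version(name)))
```

If the install worked, the reported versions should match the ones listed in the dnf transaction; `None` means the module could not be imported.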

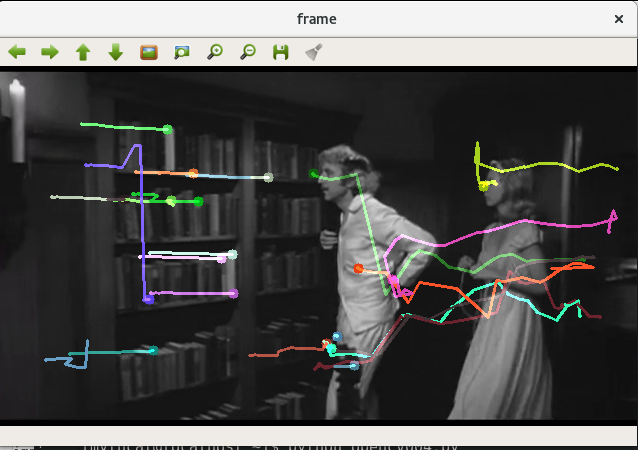

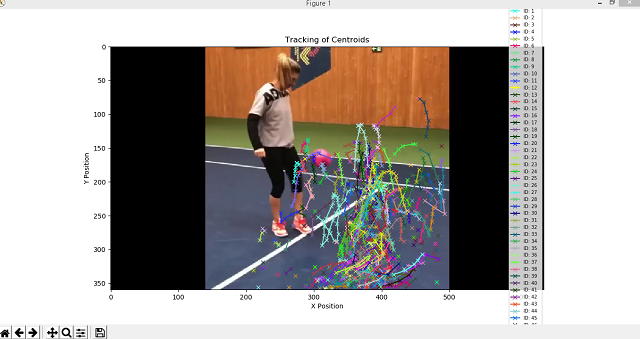

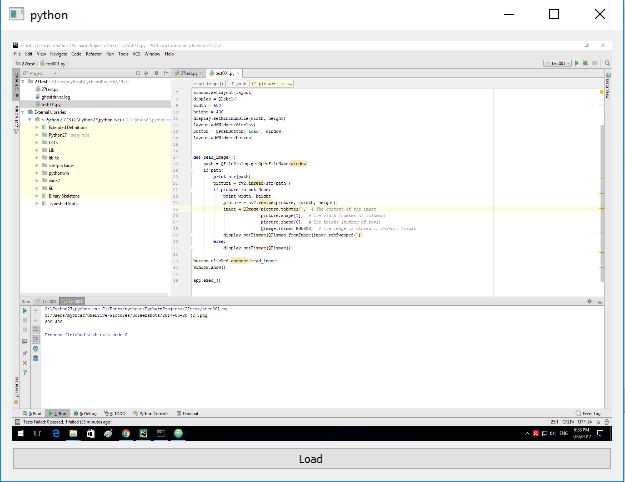

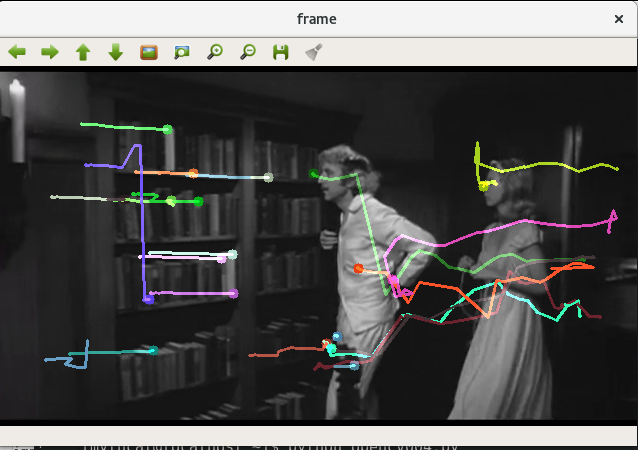

This is my test script that uses OpenCV to detect motion with the Lucas-Kanade optical flow function.

It tracks some points in a black and white video.

First you need:

- one black and white video;

- a video file that is not in mp4 format;

- the color array needs fewer than 4 values per point (the script uses 3);

- I used this video:

I used cv2.goodFeaturesToTrack() to choose the points to track.

We take the first frame, detect some Shi-Tomasi corner points in it, then we iteratively track those points using Lucas-Kanade optical flow.
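
To make the Shi-Tomasi idea concrete: the score of a small window is the smaller eigenvalue of its 2x2 gradient matrix, so it is large only when the intensity changes in both directions. The NumPy sketch below shows that score on three toy patches. `shi_tomasi_score` is a hypothetical helper written for illustration, not OpenCV's implementation; `cv2.goodFeaturesToTrack()` works per pixel and adds filtering such as `qualityLevel` and `minDistance`.

```python
import numpy as np

def shi_tomasi_score(patch):
    """Smaller eigenvalue of the patch's 2x2 gradient matrix."""
    gy, gx = np.gradient(patch.astype(float))   # derivatives along rows, columns
    m = np.array([[np.sum(gx * gx), np.sum(gx * gy)],
                  [np.sum(gx * gy), np.sum(gy * gy)]])
    return np.linalg.eigvalsh(m)[0]             # eigenvalues come back ascending

flat = np.zeros((8, 8))                         # no texture at all
edge = np.zeros((8, 8))
edge[:, 4:] = 1.0                               # vertical edge
corner = np.zeros((8, 8))
corner[:4, :4] = 1.0                            # bright top-left quadrant

scores = {"flat": shi_tomasi_score(flat),
          "edge": shi_tomasi_score(edge),
          "corner": shi_tomasi_score(corner)}
for name in ("flat", "edge", "corner"):
    print("%s: %.3f" % (name, scores[name]))
```

Only the corner patch scores clearly above zero, which is why flat regions and straight edges are poor points to track.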

To the function cv2.calcOpticalFlowPyrLK() we pass the previous frame, the previous points and the next frame.

The function returns the next points along with status numbers, which are 1 if the next point is found and 0 otherwise.
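
For intuition about what is being estimated, here is a hedged single-window sketch of the Lucas-Kanade idea in plain NumPy: assume brightness stays constant and the motion is small, then solve Ix*u + Iy*v = -It in the least-squares sense over the window. `lk_single_window` and the synthetic `image` function are hypothetical, made up for this illustration; `cv2.calcOpticalFlowPyrLK()` additionally uses image pyramids and iterative refinement, which this sketch leaves out.

```python
import numpy as np

def lk_single_window(prev_patch, next_patch):
    """Estimate one (u, v) displacement, in pixels, for a whole patch."""
    prev_patch = prev_patch.astype(float)
    next_patch = next_patch.astype(float)
    iy, ix = np.gradient(prev_patch)             # spatial derivatives (rows, columns)
    it = next_patch - prev_patch                 # temporal derivative
    a = np.column_stack([ix.ravel(), iy.ravel()])
    # Normal equations of the least-squares problem a . [u, v] = -it
    return np.linalg.solve(a.T.dot(a), -a.T.dot(it.ravel()))

def image(x, y):
    """Smooth synthetic brightness pattern (an assumption, not real video data)."""
    return np.sin(x / 5.0) + np.cos(y / 7.0)

y, x = np.mgrid[0:21, 0:21].astype(float)
true_u, true_v = 0.3, -0.2                       # known sub-pixel shift
prev_patch = image(x, y)
next_patch = image(x - true_u, y - true_v)       # same content, moved by (u, v)

u, v = lk_single_window(prev_patch, next_patch)
print("estimated flow: u=%.2f v=%.2f" % (u, v))
```

The estimate should land close to the known shift; larger motions break the small-motion assumption, which is the reason the OpenCV function works on a pyramid of downscaled images.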

We then iteratively pass these next points as the previous points in the next step.
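
The selection and the hand-off between iterations come down to two NumPy indexing steps, which can be tried without any video. The arrays below are made-up stand-ins shaped like the values cv2.calcOpticalFlowPyrLK() returns (points as N x 1 x 2, status as N x 1); the numbers are invented for illustration.

```python
import numpy as np

# Made-up results for 3 tracked points: shapes (3, 1, 2) and (3, 1)
p1 = np.array([[[10.5, 20.0]], [[30.0, 40.5]], [[50.0, 60.0]]], dtype=np.float32)
st = np.array([[1], [0], [1]], dtype=np.uint8)   # the second point was lost

good_new = p1[st == 1]                 # boolean mask keeps only the found points
p0_next = good_new.reshape(-1, 1, 2)   # back to the N x 1 x 2 layout for the next call

print(good_new.shape)   # (2, 2)
print(p0_next.shape)    # (2, 1, 2)
```

The mask drops lost points and flattens the middle axis, so the reshape is needed before the points can be passed in again as previous points.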

See the code below:

import numpy as np
import cv2

cap = cv2.VideoCapture('candle')

# Parameters for Shi-Tomasi corner detection
feature_params = dict(maxCorners=77,
                      qualityLevel=0.3,
                      minDistance=7,
                      blockSize=7)

# Parameters for Lucas-Kanade optical flow
lk_params = dict(winSize=(17, 17),
                 maxLevel=1,
                 criteria=(cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 0.03))

# Create some random colors (one 3-value color per tracked point)
color = np.random.randint(0, 255, (100, 3))

# Take the first frame and find corners in it
ret, old_frame = cap.read()
if not ret:
    raise SystemExit('Cannot read the first frame from the video')
old_gray = cv2.cvtColor(old_frame, cv2.COLOR_BGR2GRAY)
p0 = cv2.goodFeaturesToTrack(old_gray, mask=None, **feature_params)

# Create a mask image for drawing purposes
mask = np.zeros_like(old_frame)

while True:
    ret, frame = cap.read()
    if not ret:
        # Stop at the end of the video instead of failing in cvtColor
        break
    frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Calculate optical flow
    p1, st, err = cv2.calcOpticalFlowPyrLK(old_gray, frame_gray, p0, None, **lk_params)

    # Select good points
    good_new = p1[st == 1]
    good_old = p0[st == 1]

    # Draw the tracks (integer pixel coordinates work across OpenCV versions)
    for i, (new, old) in enumerate(zip(good_new, good_old)):
        a, b = [int(v) for v in new.ravel()]
        c, d = [int(v) for v in old.ravel()]
        mask = cv2.line(mask, (a, b), (c, d), color[i].tolist(), 2)
        frame = cv2.circle(frame, (a, b), 5, color[i].tolist(), -1)

    img = cv2.add(frame, mask)
    cv2.imshow('frame', img)

    # Exit when Esc is pressed
    k = cv2.waitKey(30) & 0xff
    if k == 27:
        break

    # Now update the previous frame and previous points
    old_gray = frame_gray.copy()
    p0 = good_new.reshape(-1, 1, 2)

cv2.destroyAllWindows()
cap.release()

The output of this script is: