The Google team tell us:

What is BigQuery?

Storing and querying massive datasets can be time consuming and expensive without the right hardware and infrastructure. Google BigQuery is an enterprise data warehouse that solves this problem by enabling super-fast SQL queries using the processing power of Google's infrastructure. Simply move your data into BigQuery and let us handle the hard work. You can control access to both the project and your data based on your business needs, such as giving others the ability to view or query your data.

This tutorial follows the steps from here.

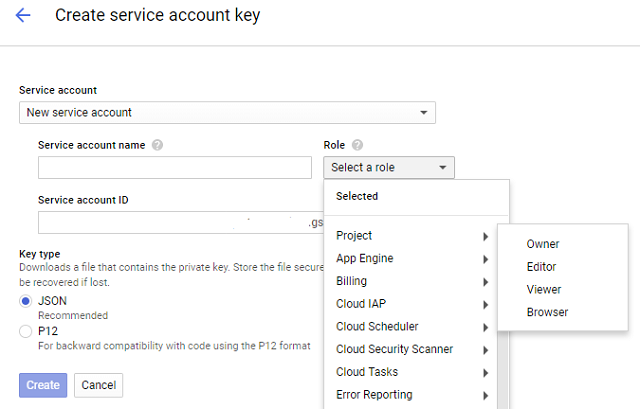

First of all, you must create an authentication file by creating a service account key in your Google project.

Go to the Google Console and navigate to the Create service account key page.

From the Service account drop-down, select New service account.

Enter a name in the form field.

From the Role drop-down, select Project, then Owner.

The result is a JSON file, which is used to authenticate with Google. Download it, rename it, and put it into your project folder.

As in the next image:

Now select Library from the left-hand menu to add the BigQuery API, or try this link.

Search for BigQuery API, then click the ENABLE button to turn it on.
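Before moving on, it can help to confirm that the downloaded file really is a service account key. The following is only a minimal sketch using the standard library: the function name check_key_file and the list of fields it checks are my own choices for illustration, not part of any Google tool, and it does not verify that the key is actually accepted by Google.

```python
import json

# Fields that a service account key file normally contains.
# This short list is an assumption made for the sketch, not a full schema.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_key_file(path):
    """Return a list of problems found in the key file (empty if none)."""
    with open(path, "r") as handle:
        try:
            data = json.load(handle)
        except ValueError:
            # Invalid JSON raises ValueError (or a subclass of it).
            return ["file is not valid JSON"]

    if not isinstance(data, dict):
        return ["top-level JSON value is not an object"]

    problems = ["missing field: " + name
                for name in REQUIRED_FIELDS if name not in data]
    if "type" in data and data["type"] != "service_account":
        problems.append("field 'type' is not 'service_account'")
    return problems
```

Calling check_key_file with the path of your renamed JSON file returns an empty list when nothing looks wrong, so you can print whatever it returns and fix the download before going further.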

The next step is to install these Python modules: pyopenssl and google-cloud-bigquery.

C:\Python27\Scripts>pip install -U pyopenssl

C:\Python27\Scripts>pip install --upgrade google-cloud-bigquery

set GOOGLE_APPLICATION_CREDENTIALS=C:\test\python_doc.json

Let's see the script:

from google.cloud import bigquery


def query_shakespeare():
    # Build the client directly from the service account key file.
    client = bigquery.Client.from_service_account_json('python_doc.json')

    # Count the rows for each work and keep the ten largest counts.
    query_job = client.query("""
        #standardSQL
        SELECT corpus AS title, COUNT(*) AS unique_words
        FROM `bigquery-public-data.samples.shakespeare`
        GROUP BY title
        ORDER BY unique_words DESC
        LIMIT 10""")

    results = query_job.result()  # Waits for job to complete.

    for row in results:
        print("{}: {}".format(row.title, row.unique_words))


if __name__ == '__main__':
    query_shakespeare()

C:\Python27>python.exe goo_test_bquerry.py

hamlet: 5318

kinghenryv: 5104

cymbeline: 4875

troilusandcressida: 4795

kinglear: 4784

kingrichardiii: 4713

2kinghenryvi: 4683

coriolanus: 4653

2kinghenryiv: 4605

antonyandcleopatra: 4582
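To see what the query is doing, here is a small local sketch of the same aggregation in plain Python. It assumes, as the unique_words alias suggests, that the table holds one row per distinct word in each work. The sample rows and the helper name top_titles are made up for illustration and are not taken from the real table.

```python
from collections import Counter

# Hypothetical sample rows: one row per (word, corpus) pair.
# The values are invented for this sketch.
rows = [
    {"word": "the", "corpus": "hamlet"},
    {"word": "king", "corpus": "hamlet"},
    {"word": "ghost", "corpus": "hamlet"},
    {"word": "the", "corpus": "kinglear"},
    {"word": "fool", "corpus": "kinglear"},
    {"word": "the", "corpus": "coriolanus"},
]


def top_titles(rows, limit=10):
    """Mimic GROUP BY title, ORDER BY unique_words DESC, LIMIT."""
    # COUNT(*) per corpus: each row adds one to its work's total.
    counts = Counter(row["corpus"] for row in rows)
    # Sort by count, largest first, and keep only the first `limit` entries.
    return counts.most_common(limit)


for title, unique_words in top_titles(rows, limit=2):
    print("{}: {}".format(title, unique_words))
```

With these sample rows the loop prints hamlet: 3 and then kinglear: 2, which has the same shape as the real output above, only on a much smaller scale.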